In December, I had the privilege of sitting on a panel titled "Defining 'Safety' in Artificial Intelligence" with a collection of leaders in the AI impacts community. Perspectives varied, but it is clear we have reached a watershed moment for the field of AI safety. Recent events have produced an identity crisis in a field that has struggled for its proper place alongside responsible/trustworthy/ethical AI research. Focusing on three particularly impactful events, we have:

- The Effective Altruism grantmaking community massively expanded AI safety funding with a focus on the existential risk posed by superintelligent AI systems. In one example, the FTX Future Fund funded a $100k prize pool to award academic ideas at a workshop. Such prizes are highly unusual for NeurIPS workshops -- the grant represents one of many that have reshaped the activities of a large number of academics now active in AI safety.

- The people behind the FTX Future Fund recently resigned en masse after learning their grants were funded by either gambling with customer funds or outright theft. This punched a hole in the budgets of many AI safety organizations.

- Motivated by disagreement with the Effective Altruism community and its funding priorities within AI safety, members of the AI ethics community are now critical of the entire AI safety enterprise. (example)

Together, these developments first pumped up and then deflated one particular aspect of AI safety (existential risk), leaving the field in a crisis of both identity and financing. This is a profoundly unfortunate outcome for the safe and ethical practice of AI and lays bare a schism between the ethics and safety communities -- communities that ought to share a common cause and purpose. Reduced to their core problems, the two fields can be defined as:

- AI Ethics: deciding what should be done

- AI Safety: engineering how to do it

Both are necessary to form a healthy culture for AI research. Without safety research, the only answer AI ethics can provide is "don't do it," while safety absent AI ethics can only address those safety problems with unambiguous answers (e.g., "the system should not kill people").

AI safety is concomitant with ethics, but the two communities are inevitably bound to internecine strife. Rather than address the schism as an intellectual distinction, I believe it is more appropriate to detail the divide as one of intellectual identity and tribe. To explain, I will detail my own journey through the AI fairness, AI for Good, and AI safety communities and conclude with a potential direction to reintegrate the communities.

The Rapid Expansion of AI Ethics

I first entered the AI ethics community via my earlier open source work in the privacy movement. As a technologist, I attempted to solve the privacy problem with "Privacy Enhancing Technologies (PETs)," but this was ultimately a failing proposition -- privacy is not "solved" through technology. Privacy first and foremost is conferred by social norms formalized with public policy. Regrettably, I view the current state of electronic privacy as largely a lost cause. In fact, this failure of privacy protection has contributed to AI systems increasingly depriving people of agency. With greater privacy, there would be a reduced need for the AI ethics field.

Thankfully, AI impacts are increasingly being noticed throughout society, perhaps more so than privacy concerns, which were and still are underserved relative to their social importance. The number of practitioners of AI ethics has exploded since 2014, when I had the privilege of attending a workshop at the NeurIPS conference on the topic of Fairness, Accountability, and Transparency (FAT) in Machine Learning. While thousands of conference attendees filled workshop rooms on the topic of deep learning, all the attendees at the FAT session went to lunch together. Though we were few in number, the discussion would turn out to be prescient, and its importance was soon to be amplified by the deep learning revolution happening a few doors down.

In retrospect, 2014 was an inflection point in technology ethics -- through each year that followed, more papers, funding, and prestige flowed in, along with a growing number of researchers. The subfields of responsible and trustworthy AI, among other branches of AI ethics, were all variations on a theme. Increasingly, researchers would gain acclaim for characterizing emerging repressive AI applications. Such papers gain press coverage and make the policy world pay attention. They are activism in the form of rigorous research. I cheered the efforts believing them motivated by a desire to make better AI systems.

I would learn otherwise between the 2018 and 2019 NeurIPS workshops on AI for Social Good. As an organizer for both iterations, I found the difference between them to be striking. The 2018 edition played to a packed house with a Yo Yo Ma keynote, invited talks about all the great things AI can do, and panels that largely concentrated on the negative impacts of AI. It was a wild and enthusiastic day. Just one year later, the attendance and tenor of the workshop would change substantially.

Amazing! Yo-yo Ma performing at the workshop AI for social goods at #NeurIPS2018

I loved it ! And great discussion after on art and AI.

Thank you so much!@YoYo_Ma #feelinglucky #cello #AIforsocialgood pic.twitter.com/EH1oVNOBi6

Amelie H (@Amelie_hel), December 8, 2018

AI for Good and Effective Altruism

Where the 2018 workshop felt like a unified community motivated by social impacts (good and bad), the 2019 edition had multiple moments where the people working for "good" outcomes were explicitly told to stop their efforts. AI in totality had become the enemy and there was no place for AI as a solution. Enter the Effective Altruists, who believe AI can be the solution to all problems -- perfect foils for an anti-AI community.

The core project of Effective Altruism is to "identify the world’s most pressing problems and the best solutions to them." This is a natural fit for the "AI for Good" movement, but early Effective Altruist leaders came out of Oxford University, which also produced the leading thinkers in the existential risk problem. The hybridization of these two movements birthed an extremely well-funded push to produce "good" superintelligent AI systems. After all, a superintelligent AI system would be able to solve everything on the way to abundance and prosperity for everyone! Conversely, it is of immense importance to prevent the production of a "bad" superintelligent system that can destroy us all. From here, Effective Altruism hooked into the then-small subfield of AI safety and pumped it with funding. AI safety went from a field concerned with current and foreseeable AI safety to one dominated by risks that have yet to materialize. It is at this point that the AI ethics community began equating AI safety with existential risk: that is to say, the only good superintelligent AI is a non-existent one.

AI Safety, Ethics, and Existential Risk

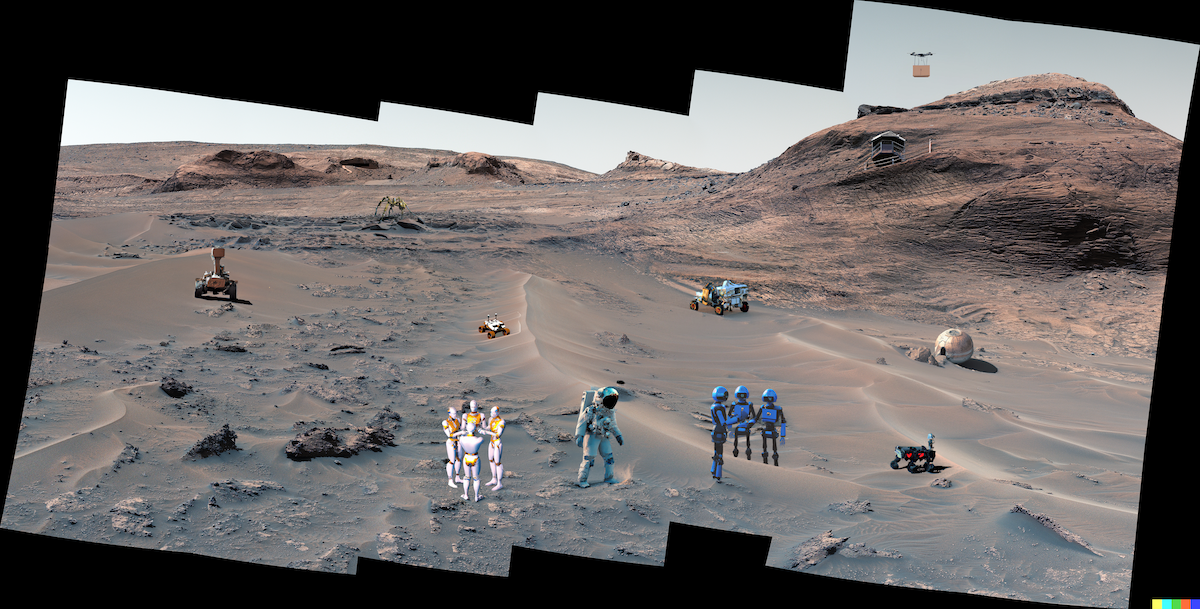

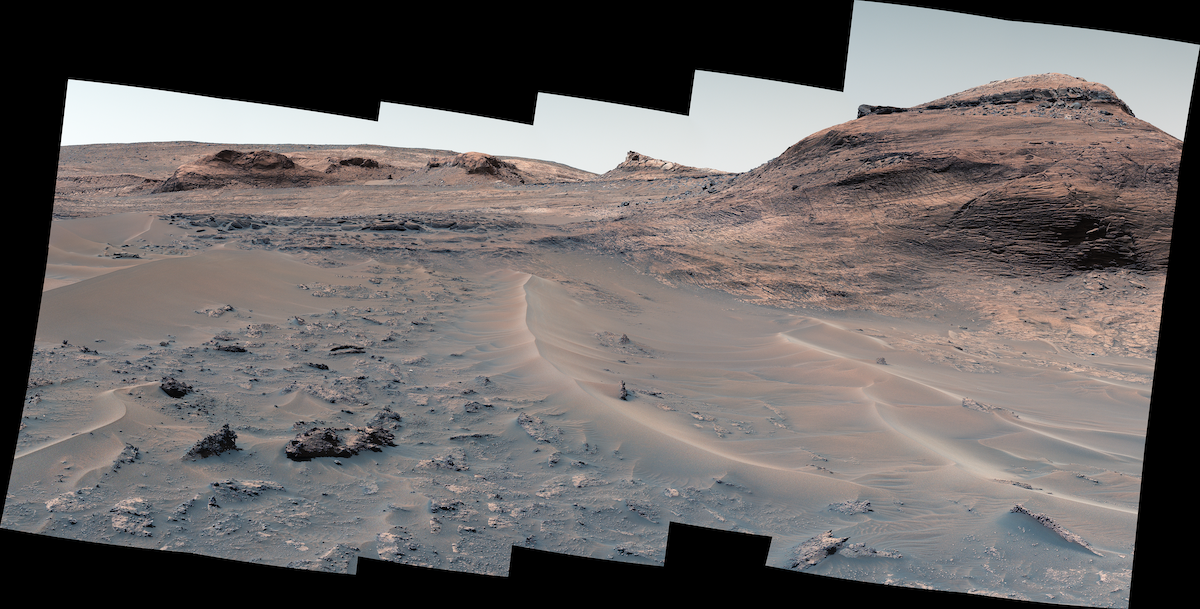

Andrew Ng drew an analogy between worrying about superintelligent AI and worrying about overpopulating Mars:

If we colonize Mars, there could be too many people there, which would be a serious pressing issue. But there's no point working on it right now, and that's why I can’t productively work on not turning AI evil. (source).

Stuart Russell then responded:

... if the whole world were engaged in a project to move the human race to Mars wouldn't it make sense to us [to ask] what are we going to breathe when we get there...that's exactly what people are working on right there. What is the carrying capacity of Mars? Zero, right? So if you put one person on Mars it's overpopulated so the people who are working on creating a Mars colony are precisely worrying about overpopulation. They want to create life support systems so that the Mars carrying capacity is more than zero and that's what we're doing. We're saying if we succeed we're going to need to be able to know how to control these systems otherwise we'll have a catastrophe (source)

To the AI ethicist, the problem is that an awfully large number of people are spending their time arguing about Mars and not addressing the problems being produced on Earth today. Further, much of the AI ethics community seems to reject the "Martian project" altogether. Where AI researchers have spent 50+ years building towards artificial general intelligence, the AI ethics community reacts in horror at the spectre of approaching success. And why work to make something safe that you don't believe should exist at all? As a result, ethicists view the AI safety community as part of the hazard of AI itself, or at least as contributing to solving the wrong problems.

The root of the ethics/safety schism is a disagreement about the collective goal of the field.

What are the consequences of this disagreement? We may see a policy response to the massive uptick in the number of indexed AI incidents, but without integrating ethics and safety we will be incapable of producing systems that avoid the incident ledger altogether.

The Alignment Problem

Both the AI Safety and AI Ethics communities can agree on the necessity of understanding how to identify the interests of stakeholders and ensure those interests are upheld throughout the production and deployment of intelligent systems. The core project of AI safety can be described as seeking to align intelligent system decision making with the goals and interests of the system's makers (i.e., a company or humanity more generally). The extreme form of the alignment problem is produced by superintelligent systems that could kill all of humanity when improperly designed. In such cases, there is little an ethicist has to offer -- researchers don't need to be told everyone dying is a bad thing. However, we already "live on Mars" and it is already overpopulated. Intelligent systems today are very poorly aligned to the aims of their makers and the society exposed to them. Ethicists can provide perspective on what constitutes alignment and processes for defining and measuring it. Safety researchers can solve the scientific and engineering problems of achieving ethical alignment in real systems. The wealth of circumstances, applications, stakeholders, and outcomes requires a feedback loop between characterizing harms and efforts to mitigate or prevent those harms.

Regardless of your viewpoint on the impacts and engineering of AI systems, the scale of the problem over the next century is likely to far exceed the community as it currently exists. I recommend anyone looking to make a career in bettering the world consider working on the social impacts of AI in either the AI ethics or AI safety communities. I hope we will have an opportunity to work together from both perspectives.

We need integrated ethics and safety research communities.