Unit Testing Learned Algorithms for Social Good

- Authors

- Sean McGregor

- @seanmcgregor

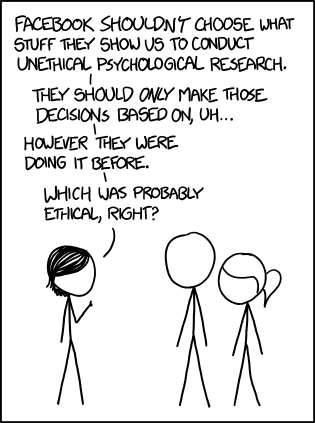

I attended a talk at Open Source Bridge titled "Open Source is Not Enough: The Importance of Algorithm Transparency". The TL;DR of the presentation is that algorithms shape your view of the world, which can lead to socially bad outcomes. Think filter bubbles, search manipulation, etc.

The presenter's prescription was to make the algorithm's choices available for user inspection. While this prescription is useful for certain types of algorithms (greedy, tree search, linear programs), it does not work for algorithms whose definition depends on the data used to "train" them (machine learning). Simply put, machine learning algorithms are too dynamic to succinctly characterize for users. Since data-derived algorithms are increasingly used in socially critical applications like credit rating systems, we need a way to ensure these algorithms do not produce socially adverse outcomes.

My prescription: Social Unit Testing!

I'll explain through an example. Let's say we don't want an algorithm to be racist. We can test a credit rating algorithm for racism by generating many pairs of random datapoints in which the only intrapair difference is the race of the individual. Now, if any pair produces a difference in credit rating, we can conclude that the algorithm is racist.
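Here is a minimal sketch of what such a paired test might look like in Python. Everything in it is an assumption made for illustration: the applicant fields, the placeholder race labels, and the two toy scoring functions standing in for a trained model. A real test would call the actual model's prediction function instead.

```python
import random

RACES = ["A", "B", "C"]  # placeholder category labels, purely illustrative


def random_applicant(rng):
    """Draw a hypothetical applicant; the fields are invented for this sketch."""
    return {
        "income": rng.randint(10_000, 200_000),
        "debt": rng.randint(0, 100_000),
        "years_employed": rng.randint(0, 40),
        "race": rng.choice(RACES),
    }


def make_pair(rng):
    """Return two applicants that differ only in the 'race' field."""
    first = random_applicant(rng)
    second = dict(first)
    second["race"] = rng.choice([r for r in RACES if r != first["race"]])
    return first, second


def count_violations(score, n_pairs=1000, seed=0):
    """Count pairs whose scores differ even though only race changed."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(n_pairs):
        a, b = make_pair(rng)
        if score(a) != score(b):
            violations += 1
    return violations


# Two toy stand-ins for a learned model (assumptions, not real credit models).
def fair_score(applicant):
    return applicant["income"] - applicant["debt"] + 1000 * applicant["years_employed"]


def biased_score(applicant):
    penalty = 5000 if applicant["race"] == "B" else 0
    return fair_score(applicant) - penalty
```

The test passes when `count_violations(model)` returns zero. The toy `fair_score` never reads the race field, so it passes, while `biased_score` fails as soon as a sampled pair includes the penalized label.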

This testing methodology can be extended to characterize all the "social bads" and incorporated into a social testing framework. It is friendly to continuous integration and indifferent to the chosen algorithm, so you can swap a decision forest in for a neural network without rewriting your tests. With a complete suite of "social good tests," the Googles of the world could report their social testing results without needing to explain or disclose their search algorithms.
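To make the "indifferent to the chosen algorithm" point concrete, here is a rough sketch of a tiny suite that only ever sees a model through its prediction function. The test names, the `gender` attribute, the sample records, and both toy models are hypothetical and chosen only for illustration. A real suite would plug into whatever test runner the CI system already uses.

```python
def swap_attribute_test(attribute, values):
    """Build a test that fails if changing only `attribute` changes the prediction."""
    def test(predict, records):
        for record in records:
            baseline = predict(record)
            for value in values:
                variant = dict(record, **{attribute: value})
                if predict(variant) != baseline:
                    return False
        return True
    return test


# Hypothetical suite: one entry per "social bad" we want to rule out.
SOCIAL_TESTS = {
    "race_invariance": swap_attribute_test("race", ["A", "B", "C"]),
    "gender_invariance": swap_attribute_test("gender", ["F", "M", "X"]),
}


def run_suite(predict, records):
    """Return a pass/fail report that reveals nothing about the model's internals."""
    return {name: test(predict, records) for name, test in SOCIAL_TESTS.items()}


# Illustrative records and two interchangeable toy models (assumptions).
RECORDS = [
    {"income": 40_000, "debt": 5_000, "race": "A", "gender": "F"},
    {"income": 90_000, "debt": 60_000, "race": "B", "gender": "M"},
    {"income": 25_000, "debt": 0, "race": "C", "gender": "X"},
]


def rule_model(r):
    return "approve" if r["income"] > 2 * r["debt"] else "deny"


def linear_model(r):
    bonus = 10_000 if r["gender"] == "M" else 0
    return "approve" if r["income"] + bonus - r["debt"] > 30_000 else "deny"
```

Calling `run_suite(rule_model, RECORDS)` and `run_suite(linear_model, RECORDS)` uses the same tests unchanged. Only the report differs, and that report is all a company would need to publish.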